How to use IMPORTXML in Google Sheets to collect data

You want collect data on the internet... quickly?

Maybe you want to copy a table from the site, or maybe you want to quickly retrieve SEO elements from a competitor.

IMPORT.XML is here to help you automate your operations of collecting data in Google Sheets.

What is the IMPORTXML function?

The IMPORTXML function in Google Sheets imports data from various types of structured databases (data types), including XML, HTML, CSV, TSV, and RSS and ATOM XML feeds (atom xml feeds).

It extracts information from any XML field, that is, any field surrounded by HTML tags (and therefore web pages), like <balise>and</balise>.

You can use it to import information that is publicly available from the web.

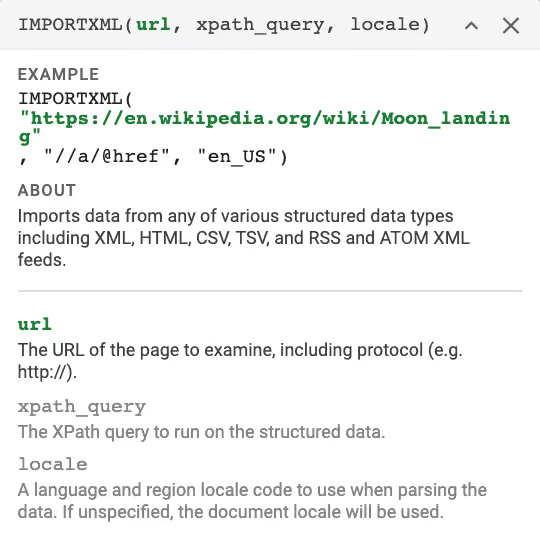

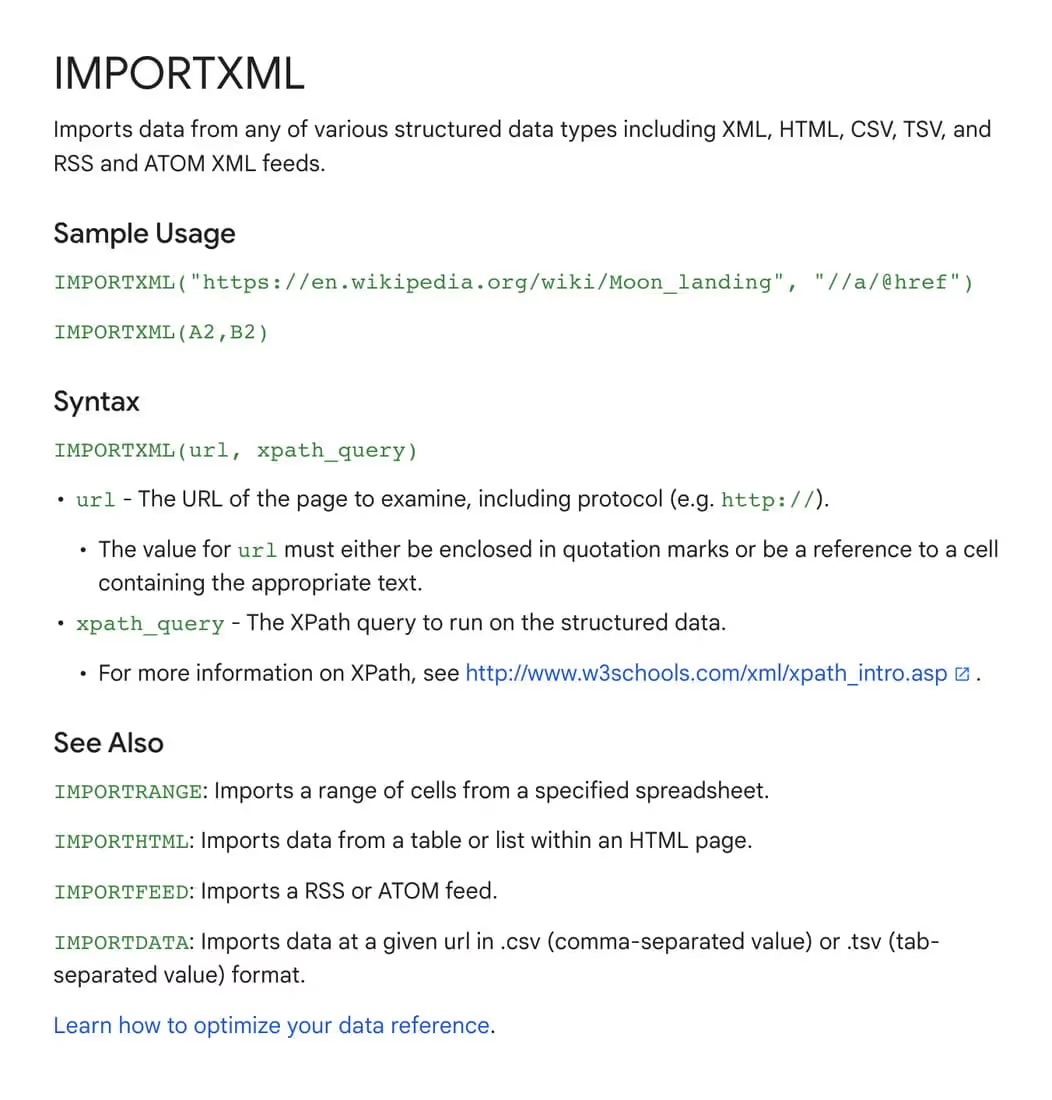

The syntax of the IMPORTXML function is as follows:

You are therefore not allowed to use it with websites that you need to connect to access it, for more information, the article below should interest you.

READ MORE: Is web scraping legal?

Basics you need to know to use IMPORTXML

importXML function: Imports data from various types of structured data (xml html csv tsv ross).

The base of a URL

- The argument url is the URL of the web page from which you want to import data (data types),

- Indicate the protocol (https://or http://).

- The URL value should be either in quotation marks or a reference to a cell that contains the appropriate text.

The basis of an XPath Request

- The argument xpath_query is the XPath query to be executed on the data in the given URL.

- Each result of the XPath query is placed in its own row in the spreadsheet.

- XPath is a query language used to retrieve information elements from sites.

- It has its own syntax that you can learn in detail from online resources.

There are a few basic rules for creating your own argument. xpath_query :

The double slash:

- The double slash”//“means the selection of all the elements of the tag that is written after it.

- For example: //h1 means select all h1 Title items, //p means all p items, and so on.

The class

- [@class = "] means that you want to select only items that meet this given criterion.

- For example, //h1 [@class ='title'] only imports h1 Title elements whose class attribute is “title”.

Elements

- You can define these elements on multiple levels as they appear in the site source.

- For example, //h1/span will look at the h1 elements and then the span elements that they contain.

XPath Basics

To use XPath, you need to understand the basics of HTML.

- Website data is displayed with HTML and stored in XML format.

- XPath is the language used to query these databases.

- You can customize these XPath arguments based on what you are looking for on the site.

XPaths examples for marketers

Check out these useful XPath extraction shortcuts to gather data from the web more quickly:

- All links on a page: “//@href”

- Extract all internal links from a page: “//a [contains (@href, 'example.com')]/@href”

- Extract all external links from a page: “//a [not (contains (@href, 'example.com'))]/@href”

- Page title: “//title”

- H1 (s): “//h1"

- Meta description: “//meta [@name ='description']/@content”

- Canonical: “//link [@rel ='canonical']/@href”.

- Robots: “//meta [@name ='robots']/@content”

- Hreflang attributes: “//link [@rel ='alternate']/@hreflang”

The function IMPORT.XML allows you to import site data directly into your spreadsheets

All you need to do is consult the source code of the website from which you want to import data, find the HTML element that can be used, and retrieve it using XPath queries.

What are the steps to use IMPORTXML in Google Sheets?

1. Start by opening a new Google sheet

First, we open a new, blank Google Sheets document:

For this example, you can use the Google Sheets sample sheet:

Google Sheets example sheet: Data collection with ImportXML

2. Add the content you need to extract

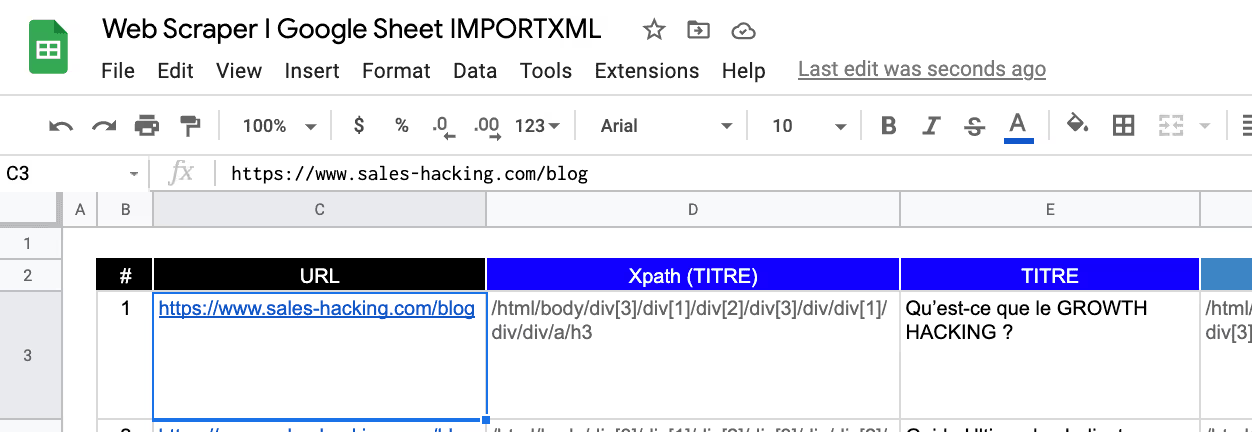

Add the URL of the page (or pages) whose information we want to extract from the web.

In our case, we are going to extract the page titles of all the articles, as well as their URL and meta descriptions.

So our starting URL will be as follows: https://www.sales-hacking.com/blog

3. Finding the XPath

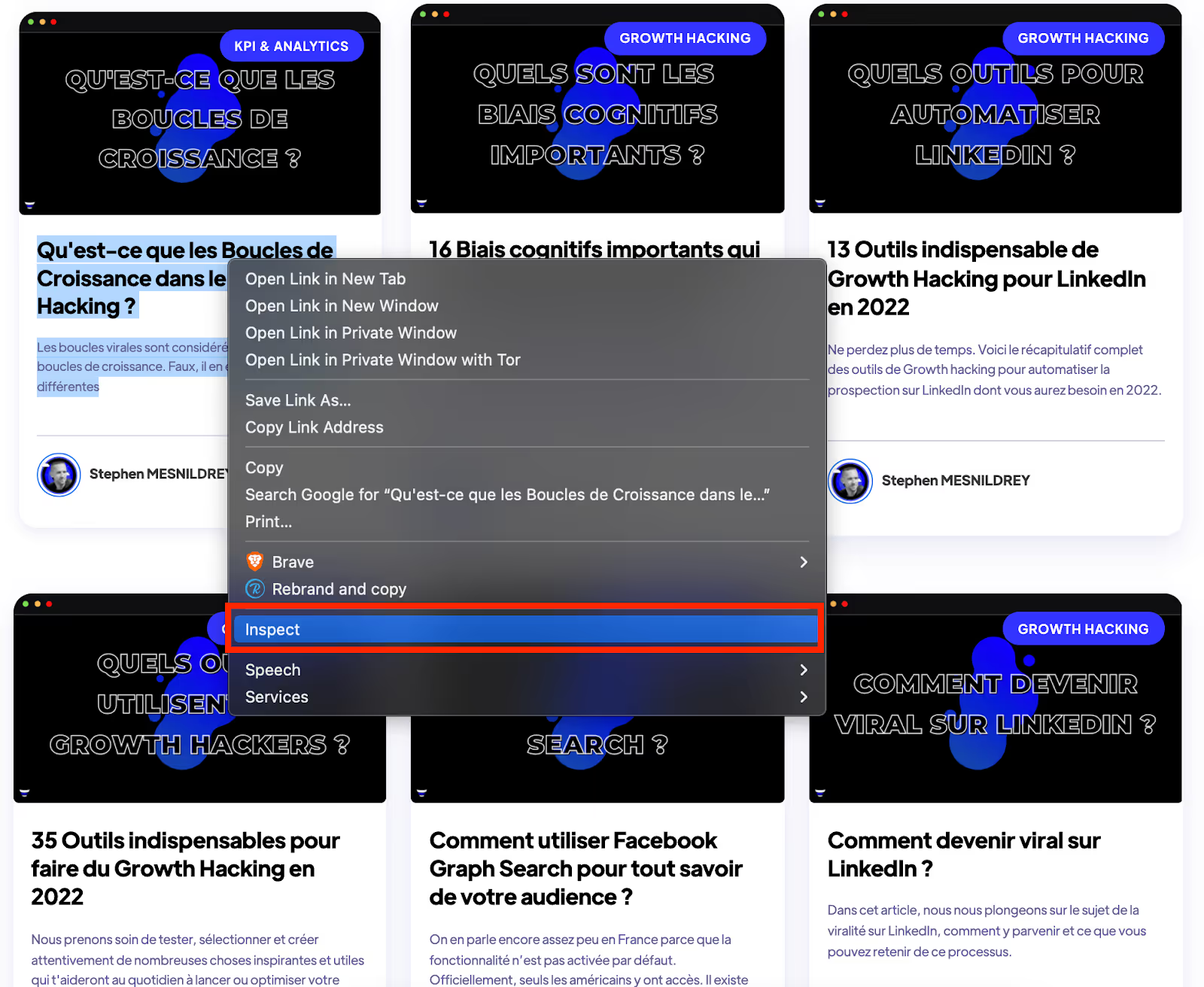

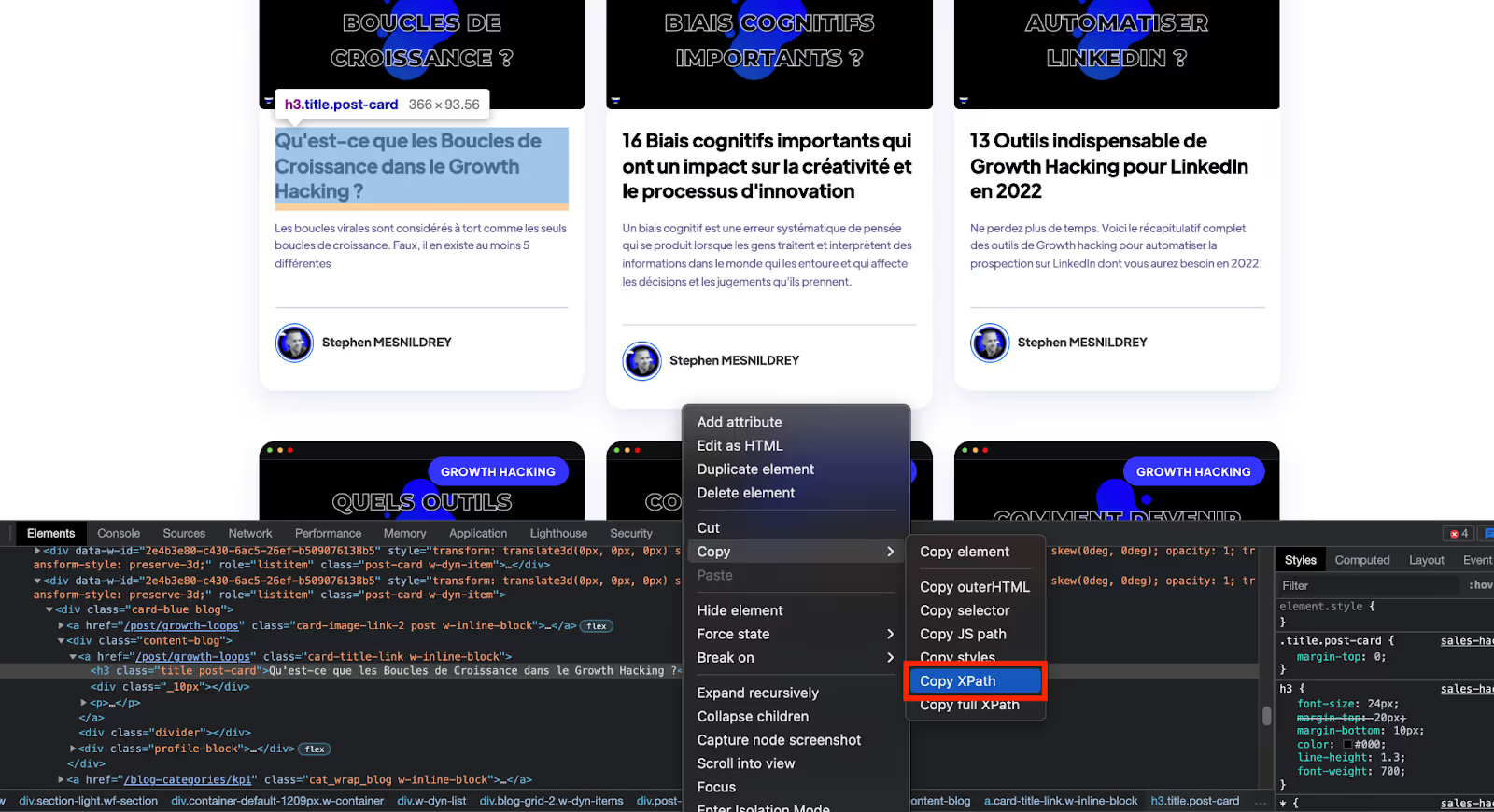

Use the Navigator Inspector

In order to find what the XPath looks like and how to find it simply, all you have to do is use a simple tool that is present on all modern browsers: The Inspector.

This XPath will allow us to specifically retrieve each information desired on the page in such a way as:

- Relative : I want all the blog titles on the web page

- Specific : I only want the first 10 or 30 article titles on the web page or only 1 in particular

In our example, let's start with the titles of the last 30 articles.

- Go to your preferred browser.

- Once you have hovered over the title of one of the articles, right-click

- And select Inspect (Inspect in English)

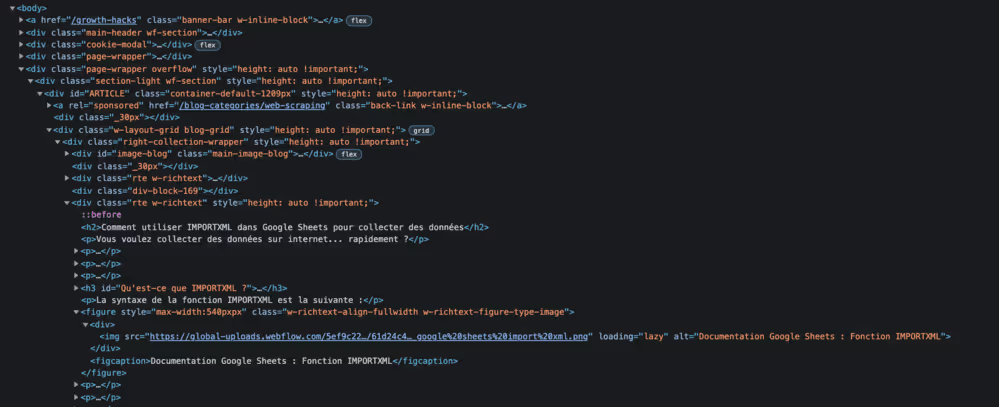

Once clicked the Browser will show you the HTML code of the web page as below:

Doesn't that speak to you? Don't worry, we'll see in the step below that the hardest thing for you to do will once again be to make a”Right click“

Copy/Paste the Xpath

The inspector (generally available in the Developer Tools section of your browser) allows you to directly select the item page for which you want more information:

- Select the part you are interested in on the page with the Inspector, i.e. in this example: the title of one of the articles

- Make sure the article title is still selected and highlighted, then right-click again and choose Copy > Copy XPath.

That's it, you've just selected the XPath that you're going to use right away in Google Sheets.

4. Extracting data into Google Sheets

To try it yourself in the Google Sheets example, you can use the example sheet below (Create a copy to use it):

Google Sheets example sheet: Data collection with ImportXML

Note : Data collection for the blog posts in this article has been voluntarily limited to 10 items to avoid any restrictions by Google services.

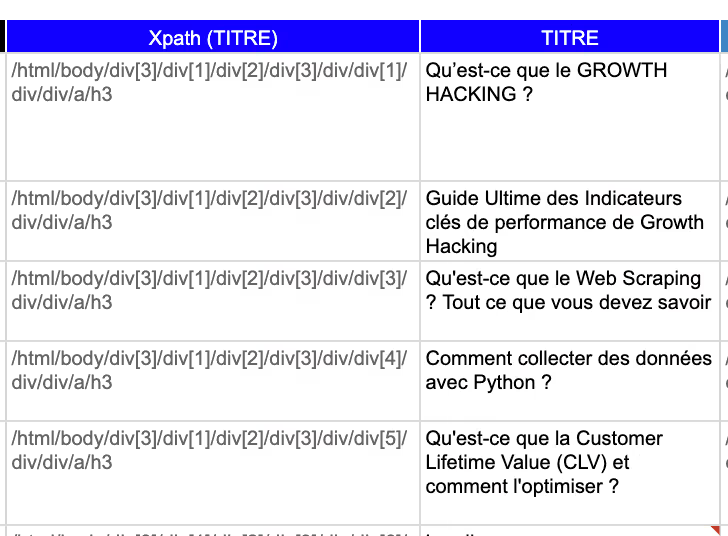

Retrieving blog title articles

Back in your Google Sheets document, introduce the IMPORTXML function as follows:

Data can also be retrieved using specific XPaths in Google Sheets.

For example, in step 3, we selected the XPath for a specific article which is this:

=IMPORTXML (B1,” /HTML/body/div [3] /div [1] /div [2] /div [3] /div/div ["&B3&"] /div/div/a/h3")

First of all, in our formula, we replaced the page URL with the reference to the cell where the URL is stored.

secondly, when you copy the XPath, it will always be enclosed in quotation marks.

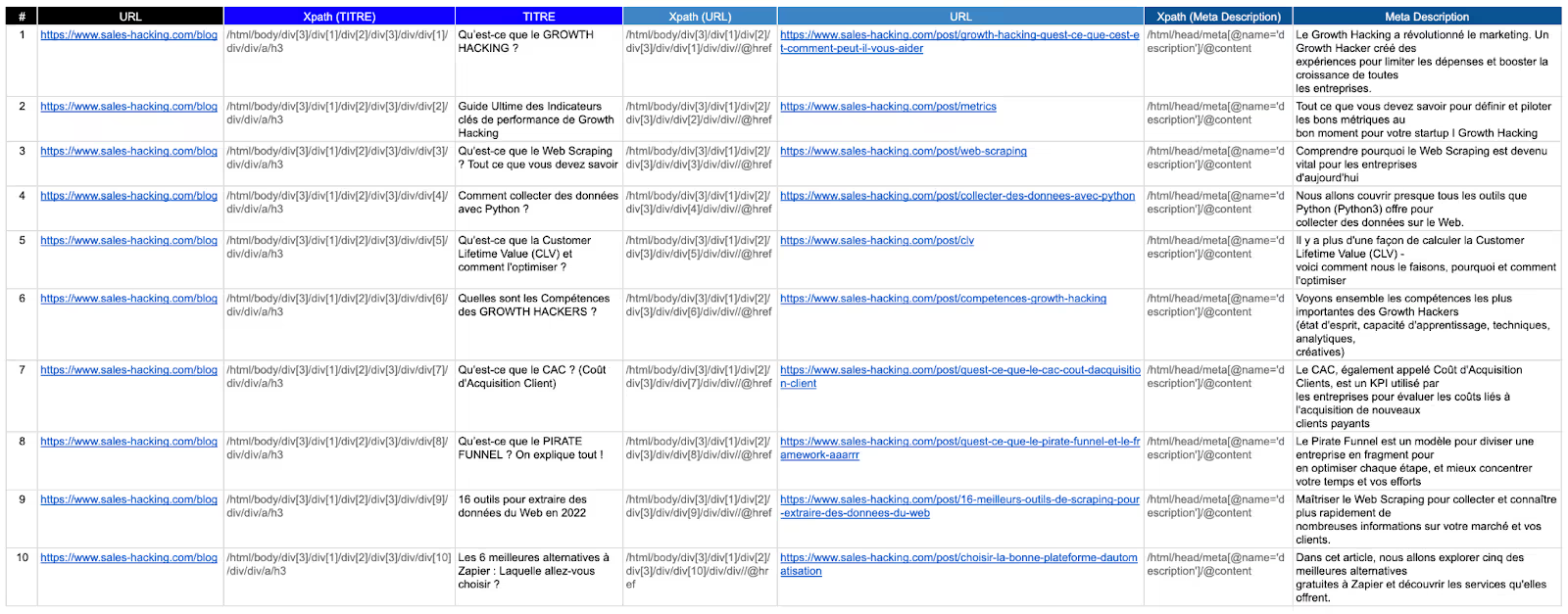

Retrieving blog post URLs

This is what it looks like on the Google Sheets document

As you can see, the list returns all the articles and URLs that are on the page we just extracted.

You can also apply this principle to the extraction of any other piece of information necessary to set up your projects, such as retrieving the meta descriptions of each page with Google Sheets (example can be found in the file below):

Google Sheets example sheet: Data collection with ImportXML

How can I automate the data collection process with IMPORTXML in Google Sheets?

You can automate the data collection process using Google Apps scripts or third-party programming tools like Python.

Scripts can be used to set a data collection schedule, add extracted data to a spreadsheet, and send update notifications.

Other Examples of IMPORTXML formulas

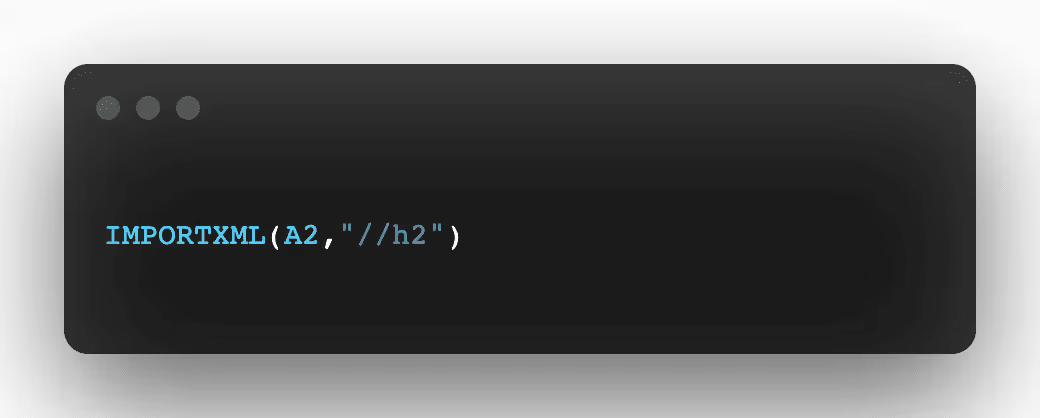

Let's say you want to import the paragraph titles from this Wikipedia article with IMPORTXML from Google Sheets.

<h2>After examining the source code, you can see that the titles are enclosed in tags.

So that's what you need to put in your IMPORTXML formula. In XPath syntax, you should write it as “//h2".

Try the following function where cell A2 contains the article URL:

First, you'll see a “Loading...” sign “, while data import is in progress. Importing from the site takes some time (but it's still faster than doing it manually).

After a few seconds, the function returns what it found on the site with the tags<h2>.

This solution is not perfect because there are additional [edit] texts next to the titles that the site contains, but it does return paragraph titles.

This time, visible text has been imported from the site.

But with the IMPORTXML function, you can import all the data that the HTML source contains. For example, it is very useful to import meta information, link sources, or any other hidden HTML information that is not visible when browsing the site.

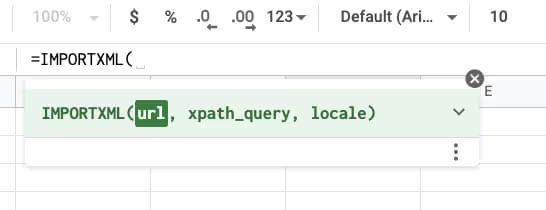

Let's get the links to YouTube videos from the Google privacy policy site.

In this case, the first argument to the IMPORTXML function is the URL of this site.

After looking at the site's source, you may realize that YouTube videos are enclosed in tags <iframe>and</iframe>.

So you need to add //iframe to the function's xPath argument.

Then, you need to go deeper into this tag <iframe>to find the required data that you want to display.

The source of the video link can be found in the src attributes.

Add a slash “/” after the first part of the XPath query followed by the src attribute.

The “@” character indicates that it is an attribute. The whole xpath_query request will be: //iframe/ @src.

And the whole function for importing YouTube links is:

Sometimes it's not so obvious what differentiates HTML from the content you want to import, but XPath allows you to create more complex queries that extract only the data you want.

XPath has some functions that you can include in your requests, such as “contains” or “starts with.”

Let's see how to import all the references (citations) at the bottom of the Google Sheets article from Wikipedia.

<li>After looking at the site source, you can see that the references are enclosed in tags.

But that's not enough because there are a lot of tags <li>on the site.

You need to find something more that differentiates these list items from other parts of the site.

Here is the attribute Id references that all contain the term “cite_note.”

The function Contain of XPath can be used to import only items <li>whose id contains the term 'cite_note'.

That's what the following IMPORTXML function in Google Sheets does:

And it returns all the references that were found on the site with that ID.

What type of data can I collect with IMPORTXML in Google Sheets?

You can collect a variety of data from the website using IMPORTXML, such as prices, product descriptions, contact information, business dates and hours, exchange rates, formatting info, and more.

When should IMPORTXML be used to collect data?

- Extraction of databases from websites or XML feeds (atom xml feeds)

- When you need page data but don't want to use tools

- When you need page data but don't know how to code

Is the use of IMPORTXML to collect data legal and ethical?

In most cases, using IMPORTXML to collect data is legal as long as the data collected is not used for illegal purposes.

However, it is important to respect the terms of use of the sites whose data is collected. In some cases, websites may prohibit the extraction of data for commercial purposes or prohibit the use of automated extraction methods such as IMPORTXML.

Before using IMPORTXML to collect data, it is important to check the terms of use of the site.

READ MORE: List of tools for collecting data on the web

FAQs

What is IMPORTXML in Google Sheets?

IMPORTXML is a function built into Google Sheets that allows you to retrieve XML data from the website and display it in your spreadsheet.

How does IMPORTXML work in Google Sheets?

IMPORTXML works by using XPath expressions to extract data from a web page.

XPath expressions are instructions that tell IMPORTXML where to find the data on the page.

Is IMPORTXML easy for beginners to use?

Although using IMPORTXML may seem complex at first glance, with a bit of practice, the function can be used easily even by beginners.

There are also numerous online resources, such as video tutorials and community support forums, that can help you learn how to use IMPORTXML effectively.

Conclusion

As you can see, IMPORTXML from Google Sheets can be a very powerful feature in your arsenal.

This gives you a fully automated and error-free method for extracting data from (potentially) any web page, whether it's product content and descriptions or e-commerce data such as product price or shipping costs.

At a time when information and data may be the advantage needed to achieve better-than-average results, the ability to collect web page data and structured content in a simple and fast manner can be invaluable.

Additionally, as discussed above, IMPORTXML from Google Sheets can help reduce execution times and the risk of errors.

Furthermore, this Google Sheets feature is not only a great tool that can be used exclusively for PPC tasks.

But it can be really useful in a lot of different projects that require scrapping the web, including SEO and content tasks.

.svg)